All you Need is... Optimization!

A new perspective on Machine Learning. This is how I discovered how Machines can Learn.

Hello, Hypers!

Even before I started studying Computer Science, I wondered how machines could learn. At first, I thought it was not even possible and that this whole AI thing was just a device for writing sci-fi novels. Then I learned to write my first programs, and I imagined that Machine Learning was all about large programs with thousands of “if” and “else” statements that covered all possible cases.

In that spirit, I tried to create a perfect Tic-Tac-Toe player. Then I realized how many “if” statements this simple game would need. What about chess? But I just assumed that I didn’t know how to program properly and that there were clever methods for coding these programs. I also thought that these programs probably just required a big team and a lot of time.

To me, it seemed impossible that a machine could learn. I came up with many hypotheses, but they were always based on a human, or a group of humans, supplying all the necessary knowledge while the machine just executed a set of rules.

Some years later, I learned that there was a time when AI was exactly this. The approach was called “Expert Systems”. In practice, expert systems are just sets of rules defined by humans. These systems were very popular in the 1980s.

Then one day I started to study Computer Science. All the teachers there seemed to know their stuff, and many of them told us that machines can indeed learn. This was a surprise! So there are programs that are able to improve with the passage of time. They behave differently from one day to the next. How is that possible? Is the code of the program changing each day?

I was confused, and I wanted answers.

It All Started with a Function

Luckily, I liked math a lot. That’s why I felt comfortable talking about functions. We can think of any task as a function that takes an input and produces an output.

Saying whether a picture has a hot dog or not is a function that takes a picture as input and produces “Yes” or “No” as output. Summarizing a text is another function that takes a text as input and produces a summary, which is also a text. Building a desk from Ikea is a function that takes all the parts and screws from Ikea and does its best to produce a desk that can bear the weight of your computer.

I was comfortable thinking this way. I didn’t care about the mathematical representation of a picture, a text, or a desk. I was happy to know that all these tasks can be treated as some specific function. I felt I was getting closer to knowing how machines learn.

The key here is that computer programs can also be defined as functions. So I had a common language to connect tasks that required learning with computer programs! We convert the task into a function, and then we write a program that is equivalent (or a close approximation) to that function.

The good thing about this approach is that I’m no longer thinking about a fixed set of rules. Instead, I’m thinking about functions. When I define a function, I get an output for every possible input. For example, you can give any set of Ikea desk parts to my function, and it will produce something similar to a desk. Maybe it won’t be the perfect desk, but at least now I have an idea of how to create programs that do things beyond what I initially expected.

With this mindset shift, I was ready to discover the real deal: the learning part. Now it was clear that the learning was about discovering the best possible function to complete the task successfully. What is the best function to tell whether a picture has a hot dog? My machine had a lot to learn…

The Big Aha! Moment

It was the very first class of the second semester of my third year at university. The topic: “Least Squares Method (LSM)”. To be honest, I wouldn’t normally have been in a class so early in the morning, but it was the first day and I thought it was a good idea to play it safe with the new teacher.

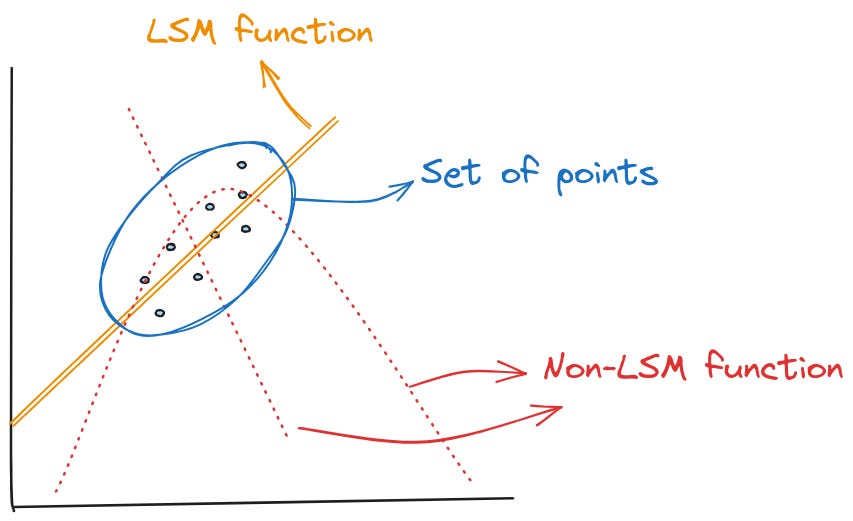

However, I got motivated very quickly. She explained that the Least Squares Method allows us to find the best-fitting curve through a set of data points, that is, the curve that minimizes the sum of the squared distances to a fixed set of points. Then my eyes opened wide. Eureka! This is it! This is how machines learn.
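To make the idea concrete, here is a minimal sketch in Python. The five data points are made up for illustration, and it uses NumPy's `np.polyfit` as one convenient way to get a least-squares line. It fits the line and then checks that a slightly different line has a larger squared error.

```python
import numpy as np

# Hypothetical data: five points that roughly follow y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.1, 2.9, 5.2, 6.8, 9.1])


def squared_error(slope, intercept):
    """Sum of squared vertical distances between the line and the points."""
    residuals = y - (slope * x + intercept)
    return float(np.sum(residuals ** 2))


# With deg=1, np.polyfit returns the least-squares slope and intercept.
slope, intercept = np.polyfit(x, y, deg=1)

best = squared_error(slope, intercept)
# Any other line, for example a slightly tilted one, has a larger error.
tilted = squared_error(slope + 0.1, intercept)

print(f"fitted line: y = {slope:.2f}x + {intercept:.2f}")
print(f"error of fitted line: {best:.4f}")
print(f"error of tilted line: {tilted:.4f}")
```

The "distance" measured here is the vertical gap between each point and the line, squared and summed over all points.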

Let’s add just a little bit of abstraction. The set of points is my training data. We are talking about points and curves with any number of dimensions. A point can be a picture together with the answer to whether that picture has a hot dog or not. A point can also be the Ikea desk parts together with the finished desk. Then, we find a function that produces points of the same kind as those in the set and, on top of that, very close to them.

For example, suppose the set has points of the form (image, yes) or (image, no), which represent whether an image has a hot dog or not. Then my function f(image) will produce “yes” or “no” as output. Since I found it with the LSM, the points (input, output) = (image, yes/no) produced by my function are very close to the ones in the set. Thus, I’d expect my function to give reasonable answers.

The function found by the LSM is a good approximation to the function that generated the initial set of points (the training data).

Furthermore, the LSM has another implicit input besides the initial set of points: the function space. This is the set of possible functions I will consider. Then I will choose the best one from the space by doing some optimization.

This could seem quite disappointing. Considering just a fixed set of functions doesn’t sound too different from considering a fixed set of rules. But it is different! The cool thing here is that we can define an infinite set of functions with almost no effort. Then we let optimization do all the dirty work.

For example, we can consider all linear functions, that is, the set of functions of the form f(image) = A*image + b. Then, from the infinitely many combinations of A and b, we find the one that best fits our initial set of points.
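As a rough sketch of that search over A and b, the snippet below uses NumPy's `np.linalg.lstsq`. Everything in it is an assumption made for illustration: the inputs are random vectors with three features rather than real images, and the "true" values `true_A` and `true_b` are invented so we can check that the optimization recovers them.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical training data: 50 inputs with 3 features each, and outputs
# generated by a known linear function plus a little noise.
true_A = np.array([2.0, -1.0, 0.5])
true_b = 4.0
X = rng.normal(size=(50, 3))
y = X @ true_A + true_b + 0.01 * rng.normal(size=50)

# Append a column of ones so that b is found together with A.
X_aug = np.hstack([X, np.ones((X.shape[0], 1))])

# Among all possible (A, b), pick the pair with the smallest squared error.
params, *_ = np.linalg.lstsq(X_aug, y, rcond=None)
A, b = params[:3], params[3]


def f(x):
    """The learned function, an instance of f(x) = A*x + b."""
    return x @ A + b


print("learned A:", A)
print("learned b:", b)
print("prediction for a new input:", f(np.array([1.0, 1.0, 1.0])))
```

Nobody wrote the values of A and b by hand. We only described the shape of the function, and the optimization picked the member of that infinite family that fits the data best.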

What was Left

I learned how machines can learn, right? Well, there was a missing part. Maybe for “toy problems” I knew how to find the best functions by calculating some derivatives. But what about the complex stuff like image classification or natural language processing?
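For a toy problem such as fitting a straight line y = a*x + b, "calculating some derivatives" can be sketched as follows. Setting the derivatives of the squared error with respect to a and b to zero gives a closed-form solution. The function name `fit_line` and the data are hypothetical, chosen only for this example.

```python
import numpy as np


def fit_line(x, y):
    """Least-squares line, obtained by setting both derivatives of
    S(a, b) = sum((y - a*x - b)**2) to zero and solving for a and b."""
    x_mean, y_mean = x.mean(), y.mean()
    slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    intercept = y_mean - slope * x_mean
    return slope, intercept


# Hypothetical data lying exactly on the line y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = np.array([1.0, 3.0, 5.0, 7.0])

slope, intercept = fit_line(x, y)

# At the solution, both partial derivatives of the squared error vanish.
residuals = y - (slope * x + intercept)
grad_slope = -2.0 * np.sum(x * residuals)
grad_intercept = -2.0 * np.sum(residuals)

print(slope, intercept)            # 2.0 1.0
print(grad_slope, grad_intercept)  # both are zero for this data
```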

I needed to learn how to represent things like texts, images, and desks mathematically. But, even more important (and harder), I needed to know how to solve optimization problems with these representations.

In the end, Machine Learning is almost all about Optimization. To fully understand what can be done and what can be hard to achieve, you need to know Optimization. Even further, the optimization mindset is key to feeling confident in Machine Learning and in many other fields of Engineering and Computer Science.

If you are interested in getting a good knowledge of optimization that allows you to improve your problem-solving skills, you might consider a new course I wrote for Educative. This is a platform with text-based interactive courses that allow you to learn without having to switch your attention or pause to practice what you learned. You do it all in the same place.

In my course Mastering Optimization with Python, I pursue two main goals:

Help you acquire the Optimization Mindset

Arm you with many practical tools to solve almost any optimization problem

As a side effect, you will polish your Python skills and maybe learn to use many libraries and tools for Optimization.

Conclusions

In this post, I wanted to tell you how I discovered Machine Learning. I don’t know whether parts of this story resonate with you, but I hope it has given you another perspective from which to approach Machine Learning (and maybe human learning too).

As I said, Optimization was the key for me to deal with all kinds of Machine Learning problems, and also to debug my solutions. As a side effect, optimization has armed me with many other tools that I use every day, even in non-ML tasks. It even influences things like my political position! (not joking)

If you like Under the Hype and want to learn more about optimization, I recommend my interactive course Mastering Optimization with Python.

And that’s it! I hope you have enjoyed this new issue. Please, let me know your thoughts by replying, and feel free to share it with your gang! Thanks a lot for another week.

See you next Tuesday!